Kodeclik Blog

Removing Duplicates from a Python List

Duplicate values in lists are a common issue in Python programming. Whether you are cleaning data, processing user input, or preparing values for analysis, removing duplicates is often a necessary step.

Python offers several ways to remove duplicates from a list, each with different trade-offs in terms of readability, performance, and whether the original order is preserved. In this post, we’ll walk through the most common techniques, explain how they work, and discuss when to use each one.

Method 1: Use a for loop

The most explicit way to remove duplicates is to iterate through the list and build a new list that only keeps unique elements.

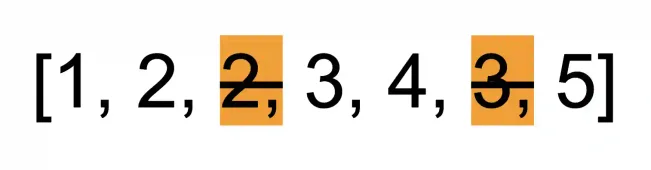

```python
numbers = [1, 2, 2, 3, 4, 3, 5]

unique_numbers = []

for n in numbers:
    # Keep n only if it has not already been added to the result
    if n not in unique_numbers:
        unique_numbers.append(n)

print(unique_numbers)
```

This approach works by scanning the original list from left to right and checking whether each element has already been added to the new list. If the element is not present, it is appended; if it has appeared before, it is skipped. Because elements are processed in order, the resulting list preserves the order of first occurrence from the original list.

The output will be:

```
[1, 2, 3, 4, 5]
```

While this method is very easy to understand and ideal for beginners, it can become inefficient for large lists. Each membership check (n not in unique_numbers) scans the growing unique_numbers list element by element, so the total work grows roughly with the square of the list length.
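Because the loop only compares elements with ==, it also works when the elements are unhashable, such as lists, which the dictionary and set methods below cannot handle. The sketch below is an illustrative variation using hypothetical sample data, not part of the original method.

```python
# Hypothetical sample data: a list of [x, y] points, some repeated.
# Lists are unhashable, so dict.fromkeys() or set() would raise TypeError here.
points = [[0, 0], [1, 2], [0, 0], [3, 4], [1, 2]]

unique_points = []
for p in points:
    # "in" on a list compares elements with ==, so no hashing is needed
    if p not in unique_points:
        unique_points.append(p)

print(unique_points)  # [[0, 0], [1, 2], [3, 4]]
```

The trade-off is unchanged: every check is still a linear scan of unique_points.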

Method 2: Convert List to Dictionary Keys and back to a List

Another elegant way to remove duplicates is to take advantage of the fact that dictionary keys must be unique. Since Python 3.7, dictionaries are also guaranteed to preserve insertion order, which makes them well suited to this task.

```python
numbers = [1, 2, 2, 3, 4, 3, 5]

unique_numbers = list(dict.fromkeys(numbers))

print(unique_numbers)
```

Here, dict.fromkeys(numbers) creates a dictionary in which each element of the list becomes a key (with the value None). Any duplicates are discarded automatically, since a dictionary cannot hold the same key twice. Passing the dictionary to list() collects its keys, producing a list of unique values in the order in which they first appeared.

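This method is both concise and efficient: each key lookup is a hash-table operation that takes constant time on average, so it avoids the linear scans of the loop-based approach. It is often considered the most Pythonic solution when order must be preserved. Because it relies on hashing, it only works when the list elements are hashable (numbers, strings, tuples, and so on), not with elements such as lists or dictionaries.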
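To see what happens at each step, the snippet below prints the intermediate dictionary before converting it to a list. Every key maps to None, which is the value dict.fromkeys() assigns when no second argument is given.

```python
numbers = [1, 2, 2, 3, 4, 3, 5]

# Each element becomes a key; repeated elements map to the same key
intermediate = dict.fromkeys(numbers)
print(intermediate)  # {1: None, 2: None, 3: None, 4: None, 5: None}

# Iterating over a dict yields its keys in insertion order
unique_numbers = list(intermediate)
print(unique_numbers)  # [1, 2, 3, 4, 5]
```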

Method 3: Convert List to a Set and back to a List

Similar to the dictionary approach, another way to remove duplicates from a list is to convert it into a set, since a set can hold each value only once.

```python
numbers = [1, 2, 2, 3, 4, 3, 5]

unique_numbers = list(set(numbers))

print(unique_numbers)
```

When the list is converted into a set, all duplicate values are removed automatically. Converting the set back into a list produces a list that contains only unique elements. Like the dictionary approach, this relies on hashing, so it is fast as well as concise, which makes it attractive when performance is important.

However, sets do not preserve order. The resulting list may not keep the elements in their original order, and for some element types (strings, for example, whose hashes are randomized for each Python process) the order can even differ between runs. Use this approach only when the order of elements does not matter.
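When a predictable order is needed but the original order is not, one common option (an alternative not covered above) is to sort the set with the built-in sorted(). This assumes the elements can be compared with one another, for example all numbers or all strings; the word list is hypothetical sample data.

```python
words = ["pear", "apple", "pear", "banana", "apple"]

# list(set(...)) removes duplicates, but the order is arbitrary
arbitrary_order = list(set(words))

# sorted() accepts any iterable, including a set, and returns a new list
sorted_unique = sorted(set(words))
print(sorted_unique)  # ['apple', 'banana', 'pear']
```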

Method 4: Use a List Comprehension

Finally, it is also possible to remove duplicates using a list comprehension combined with a set to track previously seen values.

```python
numbers = [1, 2, 2, 3, 4, 3, 5]

seen = set()

unique_numbers = [n for n in numbers if not (n in seen or seen.add(n))]

print(unique_numbers)
```

In this approach, the set seen keeps track of values that have already been encountered. For each element, the condition first checks n in seen. If the value is new, seen.add(n) runs, records it, and returns None, so the whole condition evaluates to True and the element is kept. Any later occurrence of the same value is found in seen and skipped.

This method preserves order and is more efficient than repeatedly checking a list for membership. However, it relies on side effects inside the list comprehension, which can make the code harder to read and understand. For that reason, it is best suited for experienced Python programmers who are comfortable with this style.
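If the side-effect trick feels too clever, the same set-based idea can be written as an ordinary loop. The function name unique_in_order is an illustrative choice, not a standard library function; it produces the same result as the list comprehension while keeping each step explicit.

```python
def unique_in_order(items):
    """Return a new list with duplicates removed, keeping first occurrences."""
    seen = set()   # values encountered so far (hash-based lookups)
    result = []    # unique values in their original order
    for item in items:
        if item not in seen:
            seen.add(item)
            result.append(item)
    return result

numbers = [1, 2, 2, 3, 4, 3, 5]
print(unique_in_order(numbers))  # [1, 2, 3, 4, 5]
```

Because it accepts any iterable, it also works on strings: unique_in_order("abracadabra") gives ['a', 'b', 'r', 'c', 'd'].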

Applications

Removing duplicates from lists is a common requirement in real-world Python programs. It frequently appears in data cleaning pipelines, where repeated values must be filtered out before analysis. It is also useful when handling user input, processing logs, deduplicating search results, or generating unique identifiers, tags, or categories from raw data.
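As a small illustration of the tags case, the snippet below deduplicates user-entered tags. The sample input is hypothetical, and the normalization step (stripping whitespace and lowercasing, so that "Python" and " python" count as the same tag) is an assumption about what should be treated as a duplicate; the deduplication itself is the dictionary method from earlier.

```python
# Hypothetical raw tags as a user might type them
raw_tags = ["Python", " python", "Lists", "tutorial", "LISTS ", "Tutorial"]

# Normalize first so that differently formatted copies become identical
normalized = [tag.strip().lower() for tag in raw_tags]

# Remove duplicates while keeping the order in which tags first appeared
tags = list(dict.fromkeys(normalized))
print(tags)  # ['python', 'lists', 'tutorial']
```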

The choice of method often depends on context. For small scripts or educational settings, clarity may matter more than performance. In data-intensive or production environments, efficiency and correctness with respect to ordering can be more important.

Summary

Python offers several effective ways to remove duplicates from a list, each with its own strengths. Loop-based methods are explicit and easy to follow but can be slow. Dictionary-based approaches provide a concise and efficient solution while preserving order. Set-based conversions are the fastest option when order does not matter. A list comprehension that tracks seen values in a set offers a compact, order-preserving, and efficient alternative, but at the cost of readability.

In most practical cases where order matters, converting the list to dictionary keys and back is a clean and reliable choice. When order is irrelevant, using a set is the simplest solution.
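To compare the four methods on your own data, a quick check like the one below can help. It is a rough sketch: the function names are made up for this example, the test data is synthetic, and timings from timeit depend on your machine and Python version, so no numbers are shown here.

```python
import timeit

def with_loop(items):
    result = []
    for x in items:
        if x not in result:  # linear scan of result
            result.append(x)
    return result

def with_dict(items):
    return list(dict.fromkeys(items))

def with_set(items):
    return list(set(items))  # order not preserved

def with_comprehension(items):
    seen = set()
    return [x for x in items if not (x in seen or seen.add(x))]

# Synthetic data: 5,000 values drawn from 500 distinct numbers
data = [i % 500 for i in range(5000)]

# The three order-preserving methods should agree exactly
assert with_loop(data) == with_dict(data) == with_comprehension(data)
# The set method finds the same values, possibly in a different order
assert sorted(with_set(data)) == sorted(with_dict(data))

for func in (with_loop, with_dict, with_set, with_comprehension):
    seconds = timeit.timeit(lambda: func(data), number=20)
    print(f"{func.__name__:>20}: {seconds:.4f} s for 20 runs")
```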

Enjoyed this blog post? Want to learn Python with us? Sign up for 1:1 or small group classes.